The technical term "IEEE 802.11" has been used interchangeably with Wi-Fi; however, Wi-Fi has become a superset of IEEE 802.11 over the past few years. Wi-Fi is used by over 700 million people. There are over 750,000 hotspots (places with Wi-Fi Internet connectivity) around the world, and about 800 million new Wi-Fi devices appear every year. Wi-Fi products that successfully complete the Wi-Fi Alliance interoperability certification testing can use the Wi-Fi CERTIFIED designation and trademark.

Not every Wi-Fi device is submitted to the Wi-Fi Alliance for certification. The lack of Wi-Fi certification does not necessarily imply that a device is incompatible with Wi-Fi devices or protocols. If a device is compliant or partly compatible, the Wi-Fi Alliance may not object to its description as a Wi-Fi device, though technically only the CERTIFIED designation carries the Alliance's approval.

Wi-Fi certified and compliant devices are installed in many personal computers, video game consoles, MP3 players, smartphones, printers, digital cameras, and laptop computers.

This article focuses on the certification and approvals process and the general growth of wireless networking under the Wi-Fi Alliance certified protocols. For more on the technologies, see the appropriate articles with IEEE, ANSI, IETF, W3C and ITU prefixes (acronyms for the accredited standards organizations that have created formal technology standards for the protocols by which devices communicate). Non-Wi-Fi-Alliance wireless technologies intended for fixed points, such as Motorola Canopy, are usually described as fixed wireless. Non-Wi-Fi-Alliance wireless technologies intended for mobile use are usually described as 3G, 4G or 5G, reflecting their origins and promotion by telephone/cell companies.

Wi-Fi certification

Wi-Fi technology builds on IEEE 802.11 standards. The IEEE develops and publishes some of these standards, but does not test equipment for compliance with them. The non-profit Wi-Fi Alliance formed in 1999 to fill this void — to establish and enforce standards for interoperability and backward compatibility, and to promote wireless local-area-network technology. As of 2010 the Wi-Fi Alliance consisted of more than 375 companies from around the world. Manufacturers with membership in the Wi-Fi Alliance, whose products pass the certification process, gain the right to mark those products with the Wi-Fi logo.

Specifically, the certification process requires conformance to the IEEE 802.11 radio standards, the WPA and WPA2 security standards, and the EAP authentication standard. Certification may optionally include tests of IEEE 802.11 draft standards, interaction with cellular-phone technology in converged devices, and features relating to security set-up, multimedia, and power-saving.
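The split between required and optional test areas described above can be sketched as a small data model. This is only an illustration of the paragraph, not the Wi-Fi Alliance's actual test procedure: the class name `CertificationReport`, its methods, and the string labels for each test area are all hypothetical.

```python
from dataclasses import dataclass, field

# Required areas named in the text above; the string labels are illustrative.
MANDATORY = frozenset({"802.11 radio", "WPA", "WPA2", "EAP"})

# Optional areas named in the text above; the labels are illustrative as well.
OPTIONAL = frozenset({
    "802.11 draft",
    "cellular convergence",
    "security set-up",
    "multimedia",
    "power-saving",
})


@dataclass
class CertificationReport:
    """Hypothetical record of the test areas a product has passed."""
    product: str
    passed: set = field(default_factory=set)

    def missing_mandatory(self):
        # Required areas the product has not yet passed.
        return MANDATORY - self.passed

    def is_certifiable(self):
        # A product qualifies only when no required area is missing.
        return not self.missing_mandatory()

    def optional_passed(self):
        # Optional areas that were tested and passed.
        return OPTIONAL & self.passed


router = CertificationReport(
    "example router", {"802.11 radio", "WPA", "WPA2", "EAP", "multimedia"}
)
camera = CertificationReport("example camera", {"802.11 radio", "WPA2"})

print(router.is_certifiable(), sorted(router.optional_passed()))
print(camera.is_certifiable(), sorted(camera.missing_mandatory()))
```

In this sketch the optional areas never affect eligibility; they are only reported alongside the result, mirroring the "may optionally include" wording above.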

Most recently, a new security standard, Wi-Fi Protected Setup, allows embedded devices with limited graphical user interfaces to connect to the Internet with ease. Wi-Fi Protected Setup has two configurations: the Push Button configuration and the PIN configuration. These embedded devices are low-power, battery-operated embedded systems and are collectively referred to as the Internet of Things. A number of Wi-Fi manufacturers, such as GainSpan, design chips and modules for embedded Wi-Fi.

The name Wi-Fi

The term Wi-Fi suggests Wireless Fidelity, resembling the long-established audio-equipment classification term high fidelity (in use since the 1930s) or Hi-Fi (used since 1950). Even the Wi-Fi Alliance itself has often used the phrase Wireless Fidelity in its press releases and documents; the term also appears in a white paper on Wi-Fi from ITAA. However, according to Phil Belanger, the term Wi-Fi was never supposed to mean anything at all.

The term Wi-Fi, first used commercially in August 1999, was coined by a brand-consulting firm called Interbrand Corporation that the Alliance had hired to determine a name that was "a little catchier than 'IEEE 802.11b Direct Sequence'". Belanger also stated that Interbrand invented Wi-Fi as a play on words with Hi-Fi, and also created the yin-yang-style Wi-Fi logo.

The Wi-Fi Alliance initially used an advertising slogan for Wi-Fi, "The Standard for Wireless Fidelity", but later removed the phrase from their marketing. Despite this, some documents from the Alliance dated 2003 and 2004 still contain the term Wireless Fidelity. There was no official statement related to the dropping of the term.

The yin-yang logo indicates the certification of a product for interoperability.

Internet access

A roof-mounted Wi-Fi antenna

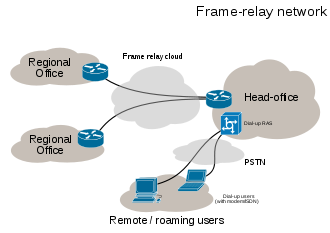

A Wi-Fi enabled device such as a personal computer, video game console, smartphone or digital audio player can connect to the Internet when within range of a wireless network connected to the Internet. The coverage of one or more (interconnected) access points, called hotspots, can comprise an area as small as a few rooms or as large as many square miles. Coverage in the larger area may depend on a group of access points with overlapping coverage. Wi-Fi technology has been used in wireless mesh networks, for example, in London, UK.

In addition to private use in homes and offices, Wi-Fi can provide public access at Wi-Fi hotspots provided either free of charge or to subscribers to various commercial services. Organizations and businesses, such as those running airports, hotels and restaurants, often provide free-use hotspots to attract or assist clients. Enthusiasts or authorities who wish to provide services or even to promote business in selected areas sometimes provide free Wi-Fi access. As of 2008 more than 300 metropolitan-wide Wi-Fi (Muni-Fi) projects had started. As of 2010 the Czech Republic had 1150 Wi-Fi based wireless Internet service providers.

Routers that incorporate a digital subscriber line modem or a cable modem and a Wi-Fi access point, often set up in homes and other premises, can provide Internet access and internetworking to all devices connected (wirelessly or by cable) to them. With the emergence of MiFi and WiBro (a portable Wi-Fi router), people can easily create their own Wi-Fi hotspots that connect to the Internet via cellular networks. Many mobile phones, including iPhone, Android, Symbian, and Windows Mobile devices, can now also create wireless connections via tethering.

One can also connect Wi-Fi devices in ad-hoc mode for client-to-client connections without a router. Wi-Fi also connects places that would traditionally not have network access, for example bathrooms, kitchens and garden sheds.

City-wide Wi-Fi


An outdoor Wi-Fi access point in Minneapolis

An outdoor Wi-Fi access point in Toronto

In May 2010, Boris Johnson, the Mayor of London, UK, pledged London-wide Wi-Fi by 2012. Both the City of London and Islington already had extensive outdoor Wi-Fi coverage at that time.

Campus-wide Wi-Fi

Carnegie Mellon University built the first wireless Internet network in the world at its Pittsburgh campus in 1994, long before Wi-Fi branding originated in 1999. Many traditional college campuses provide at least partial Wi-Fi Internet coverage.

Drexel University in Philadelphia made history in 2000 by becoming the first major university in the United States to offer completely wireless Internet access across its entire campus.

Direct computer-to-computer communications

Wi-Fi also allows communications directly from one computer to another without the involvement of an access point. This is called the ad-hoc mode of Wi-Fi transmission. This wireless ad-hoc network mode has proven popular with multiplayer handheld game consoles such as the Nintendo DS, with digital cameras, and with other consumer electronics devices.

Similarly, the Wi-Fi Alliance promotes a pending specification called Wi-Fi Direct for file transfers and media sharing through a new discovery and security methodology.

Future directions

As of 2010 Wi-Fi technology had spread widely within business and industrial sites. In business environments, as in other environments, increasing the number of Wi-Fi access points provides network redundancy, support for fast roaming, and increased overall network capacity by using more channels or by defining smaller cells. Wi-Fi enables wireless voice applications (VoWLAN or WVOIP). Over the years, Wi-Fi implementations have moved toward "thin" access points, with more of the network intelligence housed in a centralized network appliance, relegating individual access points to the role of "dumb" transceivers. Outdoor applications may utilize mesh topologies.
