Android is a mobile operating system initially developed by Android Inc., which Google bought in 2005. It is based on a modified version of the Linux kernel. Google and other members of the Open Handset Alliance collaborated to develop and release Android. The Android Open Source Project (AOSP) is tasked with the maintenance and further development of Android. Android smartphones ranked first in unit sales among all smartphone operating systems in the U.S. in the second and third quarters of 2010, with a third-quarter market share of 43.6%.

Android has a large community of developers writing application programs ("apps") that extend the functionality of the devices. There are currently over 200,000 apps available for Android. Android Market is the online app store run by Google, though apps can also be downloaded from third-party sites (except on AT&T, which disallows this). Developers write in the Java language, controlling the device via Google-developed Java libraries.

Android was unveiled on 5 November 2007 together with the founding of the Open Handset Alliance, a consortium of 79 hardware, software, and telecom companies devoted to advancing open standards for mobile devices. Google released most of the Android code under the Apache License, a free software and open source license.

The Android software stack consists of Java applications running on a Java-based, object-oriented application framework, on top of Java core libraries running on a Dalvik virtual machine featuring JIT compilation. Libraries written in C include the surface manager, OpenCore media framework, SQLite relational database management system, OpenGL ES 2.0 3D graphics API, WebKit layout engine, SGL graphics engine, SSL, and Bionic libc. The Android operating system consists of 12 million lines of code, including 3 million lines of XML, 2.8 million lines of C, 2.1 million lines of Java, and 1.75 million lines of C++.
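The line counts quoted above can be turned into rough shares of the code base. The snippet below is only a back-of-the-envelope sketch: the figures are the ones given in the text, while the function names and the idea of an "unlisted" remainder are assumptions made for illustration.

```python
# Figures from the text above, in millions of lines of code (MLOC).
TOTAL_MLOC = 12.0
BY_LANGUAGE_MLOC = {"XML": 3.0, "C": 2.8, "Java": 2.1, "C++": 1.75}


def language_shares(total, by_language):
    """Return each language's share of the total, in percent."""
    return {lang: 100.0 * mloc / total for lang, mloc in by_language.items()}


def unlisted_mloc(total, by_language):
    """Return the lines (in millions) not attributed to a listed language."""
    return total - sum(by_language.values())


if __name__ == "__main__":
    for lang, share in language_shares(TOTAL_MLOC, BY_LANGUAGE_MLOC).items():
        print(f"{lang}: {share:.1f}%")
    print(f"Not broken down: {unlisted_mloc(TOTAL_MLOC, BY_LANGUAGE_MLOC):.2f} MLOC")
```

The four listed languages add up to 9.65 million lines, so about 2.35 million lines are not broken down in the figures quoted here.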

History

Acquisition by Google

In July 2005, Google acquired Android Inc., a small startup company based in Palo Alto, California, USA. Android's co-founders who went to work at Google included Andy Rubin (co-founder of Danger), Rich Miner (co-founder of Wildfire Communications, Inc.), Nick Sears (once VP at T-Mobile), and Chris White (headed design and interface development at WebTV). At the time, little was known about the functions of Android, Inc. other than that they made software for mobile phones. This began rumors that Google was planning to enter the mobile phone market.

At Google, the team led by Rubin developed a mobile device platform powered by the Linux kernel, which they marketed to handset makers and carriers on the premise of providing a flexible, upgradable system. It was reported that Google had already lined up a series of hardware component and software partners and signaled to carriers that it was open to various degrees of cooperation on their part. More speculation that Google's Android would be entering the mobile phone market came in December 2006. Reports from the BBC and The Wall Street Journal noted that Google wanted its search and applications on mobile phones and was working hard to deliver that. Print and online media outlets soon reported rumors that Google was developing a Google-branded handset. Further speculation followed, with reports that as Google was defining technical specifications, it was showing prototypes to cell phone manufacturers and network operators.

In September 2007, InformationWeek covered an Evalueserve study reporting that Google had filed several patent applications in the area of mobile telephony.

Open Handset Alliance

"Today's announcement is more ambitious than any single 'Google Phone' that the press has been speculating about over the past few weeks. Our vision is that the powerful platform we're unveiling will power thousands of different phone models."

Eric Schmidt, Google Chairman/CEO

On 5 November 2007, the Open Handset Alliance was unveiled. It is a consortium of companies including Texas Instruments, Broadcom Corporation, Google, HTC, Intel, LG, Marvell Technology Group, Motorola, Nvidia, Qualcomm, Samsung Electronics, Sprint Nextel and T-Mobile, formed with the goal of developing open standards for mobile devices. At the same time, the OHA unveiled its first product, Android, a mobile device platform built on Linux kernel version 2.6.

On 9 December 2008, it was announced that 14 new members would be joining the Android Project, including PacketVideo, ARM Holdings, Atheros Communications, Asustek Computer Inc, Garmin Ltd, Softbank, Sony Ericsson, Toshiba Corp, and Vodafone Group Plc.

Licensing

With the exception of brief update periods, Android has been available under a free software / open source license since 21 October 2008. Google published the entire source code (including network and telephony stacks) under an Apache License.

Tuesday, December 21, 2010

Monday, December 20, 2010

iPhone

The iPhone (pronounced /ˈaɪfoʊn/ EYE-fohn) is a line of Internet and multimedia-enabled smartphones designed and marketed by Apple Inc. The first iPhone was introduced on January 9, 2007.

An iPhone functions as a camera phone, including text messaging and visual voicemail, a portable media player, and an Internet client, with e-mail, web browsing, and Wi-Fi connectivity. The user interface is built around the device's multi-touch screen, including a virtual keyboard rather than a physical one. Third-party applications are available from the App Store, which launched in mid-2008 and now has well over 300,000 "apps" approved by Apple. These apps have diverse functionalities, including games, reference, GPS navigation, social networking, security and advertising for television shows, films, and celebrities.

There are four generations of iPhone models, and they were accompanied by four major releases of iOS (formerly iPhone OS). The original iPhone established design precedents like screen size and button placement that have persisted through all models. The iPhone 3G added 3G cellular network capabilities and A-GPS location. The iPhone 3GS added a compass, faster processor, and higher resolution camera, including video. The iPhone 4 has two cameras for FaceTime video calling and a higher-resolution display. It was released on June 24, 2010.

History and availability

Development of the iPhone began with Apple CEO Steve Jobs' direction that Apple engineers investigate touchscreens. Apple created the device during a secretive and unprecedented collaboration with AT&T Mobility—Cingular Wireless at the time—at an estimated development cost of US$150 million over thirty months. Apple rejected the "design by committee" approach that had yielded the Motorola ROKR E1, a largely unsuccessful collaboration with Motorola. Instead, Cingular gave Apple the liberty to develop the iPhone's hardware and software in-house.

Jobs unveiled the iPhone to the public on January 9, 2007 at Macworld 2007. Apple was required to file for operating permits with the FCC, but since such filings are made available to the public, the announcement came months before the iPhone had received approval. The iPhone went on sale in the United States on June 29, 2007, at 6:00 pm local time, while hundreds of customers lined up outside the stores nationwide. The original iPhone was made available in the UK, France, and Germany in November 2007, and Ireland and Austria in the spring of 2008.

On July 11, 2008, Apple released the iPhone 3G in twenty-two countries, including the original six, and has since released it in upwards of eighty countries and territories. Apple announced the iPhone 3GS on June 8, 2009, along with plans to release it later in June, July, and August, starting with the U.S., Canada and major European countries on June 19. Many would-be users objected to the iPhone's cost, and 40% of users have household incomes over US$100,000. In an attempt to gain a wider market, Apple retained the 8 GB iPhone 3G at a lower price point. When Apple introduced the iPhone 4, the 3GS became the less expensive model. Apple has reduced the price several times since the iPhone's release in 2007, at which time an 8 GB iPhone sold for $599. An iPhone 3GS with the same capacity now costs $99. However, these numbers are misleading, since all iPhone units sold through AT&T require a two-year contract (costing several hundred dollars) and a SIM lock.

Apple sold 6.1 million original iPhone units over five quarters. Sales have grown steadily since; by the end of fiscal year 2010, a total of 73.5 million iPhones had been sold. Sales in Q4 2008 temporarily surpassed RIM's BlackBerry sales of 5.2 million units, which briefly made Apple the third-largest mobile phone manufacturer by revenue, after Nokia and Samsung. Approximately 6.4 million iPhones are active in the U.S. alone. While iPhone sales constitute a significant portion of Apple's revenue, some of this income is deferred.

The back of the original iPhone was made of aluminum with a black plastic accent. The iPhone 3G and 3GS feature a full plastic back to increase the strength of the GSM signal. The iPhone 3G was available in an 8 GB black model, or a black or white option for the 16 GB model. Both are now discontinued. The iPhone 3GS was available in both colors, regardless of storage capacity. The white model was discontinued in favor of a black 8 GB low-end model. The iPhone 4 has an aluminosilicate glass front and back with a stainless steel edge that serves as the antenna. It is available in black; a white version was announced, but as of October 2010 it had not been released.

The iPhone has garnered positive reviews from critics like David Pogue and Walter Mossberg. The iPhone attracts users of all ages, and besides consumer use the iPhone has also been adopted for business purposes.

Wednesday, December 15, 2010

Windows Azure

Windows Azure is Microsoft's Cloud Services Platform

Building out an infrastructure that supports your web service or application can be expensive, complicated and time consuming. Forecasting the highest possible demand. Building out the network to support your peak times. Getting the right servers in place at the right time, managing and maintaining the systems.

Or you could look to the Microsoft cloud. The Windows Azure platform is a flexible cloud-computing platform that lets you focus on solving business problems and addressing customer needs. No need to invest upfront in expensive infrastructure. Pay only for what you use, scale up when you need capacity and pull it back when you don’t. We handle all the patches and maintenance, all in a secure environment with over 99.9% uptime.

At PDC10 last month, we announced a host of enhancements for Windows Azure designed to make it easier for customers to run existing Windows applications on Windows Azure, enable more affordable platform access and improve the Windows Azure developer and IT Professional experience. Today, we’re happy to announce that several of these are either generally available or ready for you to try as a beta or Community Technology Preview (CTP). Below is a list of what’s now available, along with links to more information.

The following functionality is now generally available through the Windows Azure SDK and Windows Azure Tools for Visual Studio and the new Windows Azure Management Portal:

Development of more complete applications using Windows Azure is now possible with the introduction of Elevated Privileges and Full IIS. Developers can now run a portion or all of their code in Web and Worker roles with elevated administrator privileges. The Web role now provides Full IIS functionality, which enables multiple IIS sites per Web role and the ability to install IIS modules.

Remote Desktop functionality enables customers to connect to a running instance of their application or service in order to monitor activity and troubleshoot common problems.

Windows Server 2008 R2 Roles: Windows Azure now supports Windows Server 2008 R2 in its Web, worker and VM roles. This new support enables you to take advantage of the full range of Windows Server 2008 R2 features such as IIS 7.5, AppLocker, and enhanced command-line and automated management using PowerShell Version 2.0.

Multiple Service Administrators: Windows Azure now supports multiple Windows Live IDs to have administrator privileges on the same Windows Azure account. The objective is to make it easy for a team to work on the same Windows Azure account while using their individual Windows Live IDs.

Better Developer and IT Professional Experience: The following enhancements are now available to help developers see and control how their applications are running in the cloud:

A completely redesigned Silverlight-based Windows Azure portal to ensure an improved and intuitive user experience

Access to new diagnostic information including the ability to click on a role to see role type, deployment time and last reboot time

A new sign-up process that dramatically reduces the number of steps needed to sign up for Windows Azure.

New scenario based Windows Azure Platform forums to help answer questions and share knowledge more efficiently.

The following functionality is now available as beta:

Windows Azure Virtual Machine Role: Support for more types of new and existing Windows applications will soon be available with the introduction of the Virtual Machine (VM) role. Customers can move more existing applications to Windows Azure, reducing the need to make costly code or deployment changes.

Extra Small Windows Azure Instance, which is priced at $0.05 per compute hour, provides developers with a cost-effective training and development environment. Developers can also use the Extra Small instance to prototype cloud solutions at a lower cost.

Developers and IT Professionals can sign up for either of the betas above via the Windows Azure Management Portal.
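To put the Extra Small price quoted above in perspective, here is a minimal cost sketch. It assumes the compute charge is simply hours multiplied by the hourly rate; storage, bandwidth and any partial-hour billing rules are not covered in this post and are ignored. The function name and the 30-day month are illustrative assumptions.

```python
# Hourly rate for the Extra Small instance, as quoted in the text above.
EXTRA_SMALL_RATE_USD = 0.05


def compute_cost(hours, instances=1, rate=EXTRA_SMALL_RATE_USD):
    """Return the compute charge in US dollars (assumed: hours x instances x rate)."""
    if hours < 0 or instances < 0:
        raise ValueError("hours and instances must be non-negative")
    return round(hours * instances * rate, 2)


if __name__ == "__main__":
    hours_in_month = 24 * 30  # assumed 30-day month
    print(f"One instance, always on: ${compute_cost(hours_in_month):.2f}")
    print(f"Two instances, 8 hours a day: ${compute_cost(8 * 30, instances=2):.2f}")
```

Under these assumptions, one Extra Small instance left running for a 30-day month comes to $36.00 of compute time.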

Windows Azure Marketplace is an online marketplace for you to share, buy and sell building block components, premium data sets, training and services needed to build Windows Azure platform applications. The first section in the Windows Azure Marketplace, DataMarket, became commercially available at PDC 10. Today, we’re launching a beta of the application section of the Windows Azure Marketplace with 40 unique partners and over 50 unique applications and services.

Monday, December 13, 2010

Cloud Computing Deployment Models

Public cloud

Public cloud or external cloud describes cloud computing in the traditional mainstream sense, whereby resources are dynamically provisioned on a fine-grained, self-service basis over the Internet, via web applications/web services, from an off-site third-party provider who bills on a fine-grained utility computing basis.

Community cloud

A community cloud may be established where several organizations have similar requirements and seek to share infrastructure so as to realize some of the benefits of cloud computing. With the costs spread over fewer users than a public cloud (but more than a single tenant) this option is more expensive but may offer a higher level of privacy, security and/or policy compliance. Examples of community cloud include Google's "Gov Cloud".

Hybrid cloud

There is some confusion over the term "Hybrid" when applied to the cloud - a standard definition of the term "Hybrid Cloud" has not yet emerged. The term "Hybrid Cloud" has been used to mean either two separate clouds joined together (public, private, internal or external), or a combination of virtualized cloud server instances used together with real physical hardware. The most correct definition of the term "Hybrid Cloud" is probably the use of physical hardware and virtualized cloud server instances together to provide a single common service. Two clouds that have been joined together are more correctly called a "combined cloud".

A combined cloud environment consisting of multiple internal and/or external providers "will be typical for most enterprises". By integrating multiple cloud services users may be able to ease the transition to public cloud services while avoiding issues such as PCI compliance.

Another perspective on deploying a web application in the cloud is using Hybrid Web Hosting, where the hosting infrastructure is a mix between Cloud Hosting and Managed dedicated servers - this is most commonly achieved as part of a web cluster in which some of the nodes are running on real physical hardware and some are running on cloud server instances.

A hybrid storage cloud uses a combination of public and private storage clouds. Hybrid storage clouds are often useful for archiving and backup functions, allowing local data to be replicated to a public cloud.

Private cloud

Douglas Parkhill first described the concept of a "Private Computer Utility" in his 1966 book The Challenge of the Computer Utility. The idea was based upon direct comparison with other industries (e.g. the electricity industry) and the extensive use of hybrid supply models to balance and mitigate risks.

Private cloud and internal cloud have been described as neologisms; however, the concepts themselves pre-date the term cloud by 40 years. Even within modern utility industries, hybrid models still exist despite the formation of reasonably well-functioning markets and the ability to combine multiple providers.

Some vendors have used the terms to describe offerings that emulate cloud computing on private networks. These (typically virtualization automation) products offer the ability to host applications or virtual machines on a company's own set of hosts. They provide the benefits of utility computing: shared hardware costs, the ability to recover from failure, and the ability to scale up or down depending upon demand.

Private clouds have attracted criticism because users "still have to buy, build, and manage them" and thus do not benefit from lower up-front capital costs and less hands-on management, essentially lacking "the economic model that makes cloud computing such an intriguing concept".

Sunday, December 12, 2010

Cloud Computing

Cloud computing is Internet-based computing, whereby shared resources, software, and information are provided to computers and other devices on demand, as with the electricity grid. Cloud computing is a natural evolution of the widespread adoption of virtualization, service-oriented architecture and utility computing. Details are abstracted from consumers, who no longer need expertise in, or control over, the technology infrastructure "in the cloud" that supports them. Cloud computing describes a new supplement, consumption, and delivery model for IT services based on the Internet, and it typically involves over-the-Internet provision of dynamically scalable and often virtualized resources. It is a byproduct and consequence of the ease of access to remote computing sites provided by the Internet. This frequently takes the form of web-based tools or applications that users can access and use through a web browser as if they were programs installed locally on their own computer. NIST provides a somewhat more objective and specific definition.

The term "cloud" is used as a metaphor for the Internet, based on the cloud drawing used in the past to represent the telephone network, and later to depict the Internet in computer network diagrams as an abstraction of the underlying infrastructure it represents. Typical cloud computing providers deliver common business applications online that are accessed from another Web service or software like a Web browser, while the software and data are stored on servers.

Most cloud computing infrastructures consist of services delivered through common centers and built on servers. Clouds often appear as single points of access for consumers' computing needs. Commercial offerings are generally expected to meet quality of service (QoS) requirements of customers, and typically include service level agreements (SLAs). The major cloud service providers include Amazon, Rackspace Cloud, Salesforce, Microsoft and Google. Some of the larger IT firms that are actively involved in cloud computing are Fujitsu, Dell, Red Hat, Hewlett Packard, IBM, VMware and NetApp.

Comparisons

Cloud computing derives characteristics from, but should not be confused with:

- Autonomic computing – "computer systems capable of self-management"

- Client–server model – client–server computing refers broadly to any distributed application that distinguishes between service providers (servers) and service requesters (clients)

- Grid computing – "a form of distributed computing and parallel computing, whereby a 'super and virtual computer' is composed of a cluster of networked, loosely coupled computers acting in concert to perform very large tasks"

- Mainframe computer – powerful computers used mainly by large organizations for critical applications, typically bulk data-processing such as census, industry and consumer statistics, enterprise resource planning, and financial transaction processing

- Utility computing – the "packaging of computing resources, such as computation and storage, as a metered service similar to a traditional public utility, such as electricity"

- Peer-to-peer – distributed architecture without the need for central coordination, with participants being at the same time both suppliers and consumers of resources (in contrast to the traditional client–server model)

- Service-oriented computing – cloud computing provides services related to computing while, in a reciprocal manner, service-oriented computing consists of the computing techniques that operate on software-as-a-service

Characteristics

The fundamental concept of cloud computing is that the computing is "in the cloud", i.e. that the processing (and the related data) is not in a specified, known or the same place(s). This contrasts with a model in which the processing takes place on one or more specific, known servers. All the other concepts mentioned are supplementary or complementary to this concept.

Generally, cloud computing customers do not own the physical infrastructure, instead avoiding capital expenditure by renting usage from a third-party provider. They consume resources as a service and pay only for resources that they use. Many cloud-computing offerings employ the utility computing model, which is analogous to how traditional utility services (such as electricity) are consumed, whereas others bill on a subscription basis. Sharing "perishable and intangible" computing power among multiple tenants can improve utilization rates, as servers are not unnecessarily left idle (which can reduce costs significantly while increasing the speed of application development). A side-effect of this approach is that overall computer usage rises dramatically, as customers do not have to engineer for peak load limits. In addition, "increased high-speed bandwidth" makes it possible to receive the same response times from centralized infrastructure at other sites.

The cloud is becoming increasingly associated with small and medium enterprises (SMEs), as in many cases they cannot justify or afford the large capital expenditure of traditional IT. SMEs also typically have less existing infrastructure, less bureaucracy, more flexibility, and smaller capital budgets for purchasing in-house technology. Similarly, SMEs in emerging markets are typically unburdened by established legacy infrastructures, thus reducing the complexity of deploying cloud solutions.

Economics

Cloud computing users avoid capital expenditure (CapEx) on hardware, software, and services when they pay a provider only for what they use. Consumption is usually billed on a utility (resources consumed, like electricity) or subscription (time-based, like a newspaper) basis with little or no upfront cost. Other benefits of this approach are low barriers to entry, shared infrastructure and costs, low management overhead, and immediate access to a broad range of applications. In general, users can terminate the contract at any time (thereby avoiding return on investment risk and uncertainty), and the services are often covered by service level agreements (SLAs) with financial penalties.

According to Nicholas Carr, the strategic importance of information technology is diminishing as it becomes standardized and less expensive. He argues that the cloud computing paradigm shift is similar to the displacement of electricity generators by electricity grids early in the 20th century.

Although companies might be able to save on upfront capital expenditures, they might not save much and might actually pay more for operating expenses. In situations where the capital expense would be relatively small, or where the organization has more flexibility in its capital budget than its operating budget, the cloud model might not make great fiscal sense. Other factors affecting the scale of potential cost savings include the efficiency of a company's data center as compared to the cloud vendor's, the company's existing operating costs, the level of adoption of cloud computing, and the type of functionality being hosted in the cloud.
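As a rough illustration of this trade-off, the sketch below compares cumulative spending under the two models and reports the first month in which the cloud option has cost more in total. All amounts are hypothetical, and the model ignores financing, depreciation and hardware refresh cycles.

```python
def cumulative_on_premises(months, capex, monthly_opex):
    """Total spent after `months`: one upfront purchase plus running costs."""
    return capex + monthly_opex * months

def cumulative_cloud(months, monthly_fee):
    """Total spent after `months` with no upfront cost."""
    return monthly_fee * months

def break_even_month(capex, on_prem_monthly, cloud_monthly, horizon=120):
    """First month in which the cloud total exceeds the on-premises total.

    Returns None if that never happens within `horizon` months.
    """
    for month in range(1, horizon + 1):
        if cumulative_cloud(month, cloud_monthly) > cumulative_on_premises(
            month, capex, on_prem_monthly
        ):
            return month
    return None

# Large upfront purchase: the cloud stays cheaper for about two years.
print(break_even_month(capex=12_000, on_prem_monthly=500, cloud_monthly=1_000))
# Small upfront purchase: the cloud becomes the costlier option almost at once.
print(break_even_month(capex=1_000, on_prem_monthly=500, cloud_monthly=1_000))
# Cloud fee below on-premises running costs: no crossover within the horizon.
print(break_even_month(capex=12_000, on_prem_monthly=500, cloud_monthly=400))
```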

Among the items that some cloud hosts charge for are instances (often with extra charges for high-memory or high-CPU instances), data transfer in and out, storage (measured by the GB-month), I/O requests, PUT requests and GET requests, IP addresses, and load balancing. In some cases, users can bid on instances, with pricing dependent on demand for available instances.
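A metered bill of this kind is just a sum of quantity times unit price for each item. The sketch below uses made-up unit prices and item names (they are not any provider's actual rates or billing categories) to show how a month of usage might be itemized.

```python
from decimal import Decimal

# Hypothetical unit prices in US dollars; not any provider's actual rates.
RATES = {
    "instance_hours": Decimal("0.10"),     # per hour an instance runs
    "storage_gb_months": Decimal("0.15"),  # per GB stored for a month
    "data_transfer_gb": Decimal("0.12"),   # per GB transferred
    "requests_per_10k": Decimal("0.01"),   # per 10,000 PUT/GET requests
}

def monthly_bill(usage):
    """Return (line items, total) for a mapping of item name to quantity."""
    lines = {item: RATES[item] * Decimal(str(qty)) for item, qty in usage.items()}
    return lines, sum(lines.values(), Decimal("0"))

# One instance running for a 30-day month, 50 GB stored,
# 100 GB transferred, and 300,000 requests.
usage = {
    "instance_hours": 720,
    "storage_gb_months": 50,
    "data_transfer_gb": 100,
    "requests_per_10k": 30,
}

lines, total = monthly_bill(usage)
for item, cost in lines.items():
    print(f"{item:20s} ${cost}")
print(f"{'total':20s} ${total}")
```

Decimal is used instead of float so that currency amounts are not subject to binary rounding error.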

Architecture

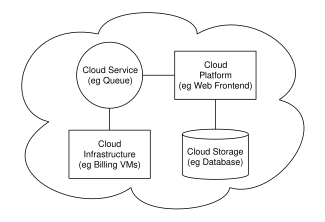

Cloud computing sample architecture

Cloud architecture, the systems architecture of the software systems involved in the delivery of cloud computing, typically involves multiple cloud components communicating with each other over application programming interfaces, usually web services. This resembles the Unix philosophy of having multiple programs each doing one thing well and working together over universal interfaces. Complexity is controlled and the resulting systems are more manageable than their monolithic counterparts.

The two most significant components of cloud computing architecture are known as the front end and the back end. The front end is the part seen by the client, i.e. the computer user. This includes the client’s network (or computer) and the applications used to access the cloud via a user interface such as a web browser. The back end of the cloud computing architecture is the ‘cloud’ itself, comprising various computers, servers and data storage devices.
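The front end and back end split can be sketched in a few lines. Everything here is hypothetical: the class names, the JSON message format and the in-process "transport" are illustrative stand-ins, whereas a real deployment would typically carry such messages over web services.

```python
import json

class StorageBackEnd:
    """Stands in for the 'cloud': holds data and answers JSON requests."""

    def __init__(self):
        self._objects = {}

    def handle(self, raw_request: str) -> str:
        request = json.loads(raw_request)
        if request["op"] == "put":
            self._objects[request["key"]] = request["value"]
            return json.dumps({"status": "ok"})
        if request["op"] == "get":
            if request["key"] in self._objects:
                return json.dumps(
                    {"status": "ok", "value": self._objects[request["key"]]}
                )
            return json.dumps({"status": "not_found"})
        return json.dumps({"status": "bad_request"})

class FrontEnd:
    """Client side: knows only the message format, not how data is stored."""

    def __init__(self, send):
        # `send` is any callable that carries a JSON string to the back end
        # and returns its JSON reply.
        self._send = send

    def save(self, key, value):
        reply = self._send(json.dumps({"op": "put", "key": key, "value": value}))
        return json.loads(reply)["status"] == "ok"

    def load(self, key):
        reply = json.loads(self._send(json.dumps({"op": "get", "key": key})))
        return reply.get("value")

front_end = FrontEnd(StorageBackEnd().handle)
front_end.save("note", "hello")
print(front_end.load("note"))
```

Because the front end depends only on the `send` callable and the message format, the back end can be replaced or relocated without changing client code, which is the manageability benefit described above.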

History

The underlying concept of cloud computing dates back to the 1960s, when John McCarthy opined that "computation may someday be organized as a public utility." Almost all the modern-day characteristics of cloud computing (elastic provision, provided as a utility, online, illusion of infinite supply), the comparison to the electricity industry and the use of public, private, government and community forms were thoroughly explored in Douglas Parkhill's 1966 book, The Challenge of the Computer Utility.

The actual term "cloud" borrows from telephony in that telecommunications companies, who until the 1990s primarily offered dedicated point-to-point data circuits, began offering Virtual Private Network (VPN) services with comparable quality of service but at a much lower cost. By switching traffic to balance utilization as they saw fit, they were able to utilize their overall network bandwidth more effectively. The cloud symbol was used to denote the demarcation point between what was the responsibility of the provider and what was the responsibility of the user. Cloud computing extends this boundary to cover servers as well as the network infrastructure. The first scholarly use of the term “cloud computing” was in a 1997 lecture by Ramnath Chellappa.

Amazon played a key role in the development of cloud computing by modernizing its data centers after the dot-com bubble. Like most computer networks, these were using as little as 10% of their capacity at any one time, just to leave room for occasional spikes. Having found that the new cloud architecture resulted in significant internal efficiency improvements, whereby small, fast-moving "two-pizza teams" could add new features faster and more easily, Amazon initiated a new product development effort to provide cloud computing to external customers, and launched Amazon Web Services (AWS) on a utility computing basis in 2006.

In 2007, Google, IBM and a number of universities embarked on a large-scale cloud computing research project. In early 2008, Eucalyptus became the first open-source, AWS API-compatible platform for deploying private clouds. By mid-2008, Gartner saw an opportunity for cloud computing "to shape the relationship among consumers of IT services, those who use IT services and those who sell them" and observed that "organisations are switching from company-owned hardware and software assets to per-use service-based models" so that the "projected shift to cloud computing ... will result in dramatic growth in IT products in some areas and significant reductions in other areas."

Sunday, December 5, 2010

Kinect History

Kinect was first announced on June 1, 2009 at E3 2009 under the code name "Project Natal". Following in Microsoft's tradition of using cities as code names, "Project Natal" was named after the Brazilian city of Natal as a tribute to the country by Microsoft director Alex Kipman, who incubated the project, and who is from Brazil. The name Natal was also chosen because the word natal means "of or relating to birth", reflecting Microsoft's view of the project as "the birth of the next generation of home entertainment".

Four demos were shown to showcase Kinect when it was revealed at Microsoft's E3 2009 Media Briefing: Ricochet, Paint Party, Milo & Kate, and "Mega Ring Ring, Mega Ring Ring". A demo based on Burnout Paradise was also shown outside of Microsoft's media briefing. The skeletal mapping technology shown at E3 2009 was capable of simultaneously tracking four people, with a feature extraction of 48 skeletal points on a human body at 30 Hz.

It was rumored that the launch of Project Natal would be accompanied by the release of a new Xbox 360 console (as either a new retail configuration, a significant design revision and/or a modest hardware upgrade). Microsoft dismissed the reports in public, and repeatedly emphasized that Project Natal would be fully compatible with all Xbox 360 consoles. Microsoft indicated that the company considered it to be a significant initiative, as fundamental to the Xbox brand as Xbox Live, and with a launch akin to that of a new Xbox console platform. Kinect was even referred to as a "new Xbox" by Microsoft CEO Steve Ballmer at a speech for the Executives' Club of Chicago. When asked if the introduction would extend the time before the next-generation console platform is launched (historically about 5 years between platforms), Microsoft corporate vice president Shane Kim reaffirmed that the company believed that the life cycle of the Xbox 360 would last through 2015 (10 years).

During Kinect's development, project team members experimentally adapted numerous games to Kinect-based control schemes to help evaluate usability. Among these games were Beautiful Katamari and Space Invaders Extreme, which were demonstrated at the Tokyo Game Show in September 2009. According to creative director Kudo Tsunoda, adding Kinect-based control to pre-existing games would involve significant code alterations, making it unlikely for Kinect features to be added through software updates.

Although the sensor unit was originally planned to contain a microprocessor that would perform operations such as the system's skeletal mapping, it was revealed in January 2010 that the sensor would no longer feature a dedicated processor. Instead processing would be handled by one of the processor cores of the Xbox 360's Xenon CPU. According to Alex Kipman, the Kinect system consumes about 10-15% of the Xbox 360's computing resources. However, in November, Alex Kipman made a statement that "the new motion control tech now only uses a single-digit percentage of the Xbox 360's processing power, down from the previously stated ten to 15 percent." A number of observers commented that the computational load required for Kinect makes the addition of Kinect functionality to pre-existing games through software updates even less likely, with Kinect-specific concepts instead likely to be the focus for developers using the platform.

On March 25, Microsoft sent out a save-the-date flier for an event called the "World Premiere 'Project Natal' for the Xbox 360 Experience" at E3 2010. The event took place on the evening of Sunday, June 13, 2010 at the Galen Center and featured a performance by Cirque du Soleil. It was announced that the system would officially be called Kinect, a portmanteau of the words "kinetic" and "connect", which describe key aspects of the initiative. Microsoft also announced that the North American launch date for Kinect would be November 4, 2010. Despite previous statements dismissing speculation of a new Xbox 360 to accompany the launch of the new control system, Microsoft announced at E3 2010 that it was introducing a redesigned Xbox 360, complete with a Kinect-ready connector port. In addition, on July 20, 2010, Microsoft announced a Kinect bundle with a redesigned Xbox 360, to be available with the Kinect launch.

Launch

Microsoft has an advertising budget of US$500 million for the launch of Kinect, a larger sum than the investment at launch of the Xbox console. Plans involve featuring Kinect on the YouTube homepage, advertisements on Disney's and Nickelodeon's digital publications as well as during Dancing with the Stars and Glee. Print ads are to be published in People Magazine and InStyle, while brands such as Pepsi, Kellogg's and Burger King will also carry Kinect advertisements. A major Kinect event was also organized in Times Square, where Kinect was promoted via the many billboards.

On October 19, before the Kinect launch, Microsoft advertised Kinect on The Oprah Winfrey Show by giving free Xbox 360s and Kinect sensors to the people in the audience. Later it also gave away Kinect bundles with Xbox 360s to the audiences of The Ellen Show and Late Night With Jimmy Fallon.

On October 23, Microsoft held a pre-launch party for Kinect in Beverly Hills. The party was hosted by Ashley Tisdale and was attended by soccer star David Beckham and his three sons, Cruz, Brooklyn, and Romeo. Guests were treated to sessions with Dance Central and Kinect Adventures, followed by Tisdale having a Kinect voice chat with Nick Cannon. On November 1, 2010, Burger King started giving away a free Kinect bundle "every 15 minutes". The contest ended on November 28, 2010.

Kinect

Kinect for Xbox 360, or simply Kinect (originally known by the code name Project Natal), is a "controller-free gaming and entertainment experience" by Microsoft for the Xbox 360 video game platform, and may later be supported by PCs via Windows 8. Based around a webcam-style add-on peripheral for the Xbox 360 console, it enables users to control and interact with the Xbox 360 without the need to touch a game controller, through a natural user interface using gestures, spoken commands, or presented objects and images. The project is aimed at broadening the Xbox 360's audience beyond its typical gamer base. Kinect competes with the Wii Remote with Wii MotionPlus and PlayStation Move & PlayStation Eye motion control systems for the Wii and PlayStation 3 home consoles, respectively.

Kinect was launched in North America on November 4, 2010, in Europe on November 10, 2010, in Australia, New Zealand and Singapore on November 18, 2010 and in Japan on November 20, 2010. Purchase options for the sensor peripheral include a bundle with the game Kinect Adventures and console bundles with either a 4 GB or 250 GB Xbox 360 console and Kinect Adventures.

As of November 29, 2010, 2.5 million Kinect sensors have been sold.

Technology

Kinect is based on software technology developed internally by Rare, a subsidiary of Microsoft Game Studios owned by Microsoft, and on range camera technology by Israeli developer PrimeSense, which interprets 3D scene information from a continuously projected infrared structured light.

The Kinect sensor is a horizontal bar connected to a small base with a motorized pivot, and is designed to be positioned lengthwise above or below the video display. The device features an "RGB camera, depth sensor and multi-array microphone running proprietary software", which provides full-body 3D motion capture, facial recognition and voice recognition capabilities. Voice recognition capabilities will be available in Japan, the United Kingdom, Canada and the United States at launch, but have been postponed until spring 2011 in mainland Europe. The Kinect sensor's microphone array enables the Xbox 360 to conduct acoustic source localization and ambient noise suppression, allowing for things such as headset-free party chat over Xbox Live.

The depth sensor consists of an infrared laser projector combined with a monochrome CMOS sensor, and allows the Kinect sensor to see in 3D under any ambient light conditions. The sensing range of the depth sensor is adjustable, with the Kinect software capable of automatically calibrating the sensor based on gameplay and the player's physical environment, such as the presence of furniture.

Described by Microsoft personnel as the primary innovation of Kinect, the software technology enables advanced gesture recognition, facial recognition, and voice recognition. According to information supplied to retailers, the Kinect is capable of simultaneously tracking up to six people, including two active players for motion analysis with a feature extraction of 20 joints per player.

Through reverse engineering efforts, it has been determined that the Kinect sensor outputs video at a frame rate of 30 Hz, with the RGB video stream at 8-bit VGA resolution (640 × 480 pixels) with a Bayer color filter, and the monochrome video stream used for depth sensing at 11-bit VGA resolution (640 × 480 pixels with 2,048 levels of sensitivity). The Kinect sensor has a practical ranging limit of 1.2–3.5 metres (3.9–11 ft) distance when used with the Xbox software. The area required to play Kinect is roughly 6 m², although the sensor can maintain tracking through an extended range of approximately 0.7–6 metres (2.3–20 ft). The sensor has an angular field of view of 57° horizontally and 43° vertically, while the motorized pivot is capable of tilting the sensor as much as 27° either up or down. The microphone array features four microphone capsules, and operates with each channel processing 16-bit audio at a sampling rate of 16 kHz.
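The figures above allow some back-of-the-envelope arithmetic. The sketch below assumes uncompressed, unpadded streams (the actual format on the wire may differ) and a simple pinhole-style geometry for the field of view; the function names are illustrative only.

```python
import math

FRAME_RATE_HZ = 30
WIDTH, HEIGHT = 640, 480

def video_bits_per_second(bits_per_pixel):
    """Raw bit rate of one 640 x 480 stream at 30 frames per second."""
    return WIDTH * HEIGHT * bits_per_pixel * FRAME_RATE_HZ

rgb_bps = video_bits_per_second(8)     # Bayer data: one 8-bit sample per pixel
depth_bps = video_bits_per_second(11)  # 11-bit depth samples
audio_bps = 4 * 16 * 16_000            # four capsules, 16-bit, 16 kHz
depth_levels = 2 ** 11                 # the 2,048 levels mentioned above

def coverage_extent_m(distance_m, fov_degrees):
    """Extent of the scene spanned by a field of view at a given distance."""
    return 2 * distance_m * math.tan(math.radians(fov_degrees / 2))

# Scene covered at the far end of the practical 1.2-3.5 m range.
width_at_far_limit = coverage_extent_m(3.5, 57)
height_at_far_limit = coverage_extent_m(3.5, 43)

print(f"RGB:   {rgb_bps / 1e6:.1f} Mbit/s")
print(f"Depth: {depth_bps / 1e6:.1f} Mbit/s")
print(f"Audio: {audio_bps / 1e6:.3f} Mbit/s")
print(f"View at 3.5 m: {width_at_far_limit:.1f} m x {height_at_far_limit:.1f} m")
```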

Because the Kinect sensor's motorized tilt mechanism requires more power than can be supplied via the Xbox 360's USB ports, the Kinect sensor features a proprietary connector combining USB communication with additional power. Redesigned "Xbox 360 S" models include a special AUX port for accommodating the connector, while older models require a special power supply cable (included with the sensor) which splits the connection into separate USB and power connections; power is supplied from the mains by way of an AC adapter.

Kinect was launched in North America on November 4, 2010, in Europe on November 10, 2010, in Australia, New Zealand and Singapore on November 18, 2010 and in Japan on November 20, 2010. Purchase options for the sensor peripheral include a bundle with the game Kinect Adventures and console bundles with either a 4 GB or 250 GB Xbox 360 console and Kinect Adventures.

As of November 29, 2010, 2.5 million Kinect sensors have been sold.

Technology

Kinect is based on software technology developed internally by Rare, a subsidiary of Microsoft Game Studios, and on range camera technology by Israeli developer PrimeSense, which interprets 3D scene information from continuously projected infrared structured light.

Friday, December 3, 2010

HTML5 video

HTML5 video is an element introduced in the HTML5 draft specification for the purpose of playing videos or movies[1], partially replacing the object element.

Adobe Flash Player is widely used to embed video on web sites such as YouTube, since many web browsers have Adobe's Flash Player pre-installed (with exceptions such as the browsers on the Apple iPhone and iPad and on Android 2.1 or less). HTML5 video is intended by its creators to become the new standard way to show video online, but has been hampered by lack of agreement as to which video formats should be supported in the video tag.

Supported video formats

The current HTML5 draft specification does not specify which video formats browsers should support in the video tag. User agents are free to support any video formats they feel are appropriate.
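Because no single format is guaranteed, pages commonly offer the same clip in several encodings and let the browser choose. The Python sketch below generates such markup: a video element with one source child per format, each carrying a MIME type with a codecs parameter so the browser can skip formats it cannot decode. The function name, the `media/clip` path and the fallback sentence are hypothetical, and the codec strings are common examples rather than anything the draft requires.

```python
from html import escape

# File extension -> MIME type with a codecs parameter (illustrative values).
SOURCE_TYPES = {
    "webm": 'video/webm; codecs="vp8, vorbis"',
    "ogv": 'video/ogg; codecs="theora, vorbis"',
    "mp4": 'video/mp4; codecs="avc1.42E01E, mp4a.40.2"',
}

def video_markup(base_url, formats=("webm", "ogv", "mp4"),
                 fallback="Your browser does not support HTML5 video."):
    """Build a <video> element that lists one <source> per format.
    The browser tries the sources in order and plays the first it supports."""
    lines = ["<video controls>"]
    for ext in formats:
        src = escape(f"{base_url}.{ext}", quote=True)
        mime = escape(SOURCE_TYPES[ext], quote=True)  # quotes become &quot;
        lines.append(f'  <source src="{src}" type="{mime}">')
    # Text inside <video> is shown only by browsers without video support.
    lines.append(f"  {escape(fallback)}")
    lines.append("</video>")
    return "\n".join(lines)

markup = video_markup("media/clip")  # hypothetical path to the encoded files
print(markup)
```

Listing order matters here, since the first playable source wins; a site preferring royalty-free formats would list WebM or Ogg before H.264.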

Default video format debate

It is desirable to specify at least one video format which all user agents (browsers) should support. The ideal format should:

Have good compression, good image quality, and low decode processor use.

Be royalty-free.

Have a hardware video decoder available in addition to software decoders, as many embedded processors do not have the performance to decode video.

Initially, Ogg Theora was the recommended standard video format in HTML5, because it was not affected by any known patents. But on December 10, 2007, the HTML5 specification was updated, replacing the reference to concrete formats:

User agents should support Theora video and Vorbis audio, as well as the Ogg container format.

with a placeholder:

It would be helpful for interoperability if all browsers could support the same codecs. However, there are no known codecs that satisfy all the current players: we need a codec that is known to not require per-unit or per-distributor licensing, that is compatible with the open source development model, that is of sufficient quality as to be usable, and that is not an additional submarine patent risk for large companies. This is an ongoing issue and this section will be updated once more information is available.

Although Theora is not affected by known patents, companies such as Apple and (reportedly) Nokia are concerned about unknown patents that might affect it, whose owners might be waiting for a corporation with extensive financial resources to use the format before suing. Formats like H.264 might also be subject to unknown patents in principle, but they have been deployed much more widely and so it is presumed that any patent-holders would have already sued someone. Apple has also opposed requiring Ogg format support in the HTML standard (even as a "should" requirement) on the grounds that some devices might support other formats much more easily, and that HTML has historically not required particular formats for anything.

Some web developers criticized the removal of the Ogg formats from the specification. A follow-up discussion also occurred on the W3C questions and answers blog.

H.264/MPEG-4 AVC is widely used, and has good speed, compression, hardware decoders, and video quality, but is covered by patents. Except in particular cases, users of H.264 have to pay licensing fees to the MPEG LA, a group of patent-holders including Microsoft and Apple. As a result, it has not been considered as a required default codec.

Google's acquisition of On2 resulted in the WebM Project, a royalty-free, open source release of VP8, in a Matroska container with Vorbis audio. It is supported by Google Chrome, Opera Browser and Mozilla Firefox.

Thursday, December 2, 2010

Spike Video Game Awards Previous Winners

2009 Awards

The 2009 VGAs were held on December 12, 2009 at the Nokia Event Deck in Los Angeles, California[5] and were the first VGAs not to have an overall host.[6] The show opened with a trailer announcing the sequel to Batman: Arkham Asylum. There were other exclusive looks at Prince of Persia: The Forgotten Sands, UFC 2010 Undisputed, Halo: Reach, and others. Samuel L. Jackson previewed LucasArts' upcoming Star Wars game, Star Wars: The Force Unleashed II. In addition, Green Day: Rock Band was announced and accompanied by a trailer.

With appearances from Stevie Wonder, the cast of MTV's Jersey Shore, Green Day and Jack Black, the 2009 awards included live music performances by Snoop Dogg and The Bravery.

Game of the Year: Uncharted 2: Among Thieves

Studio of the Year: Rocksteady Studios

Best Independent Game Fueled by Dew: Flower

Best Xbox 360 Game: Left 4 Dead 2

Best PS3 Game: Uncharted 2: Among Thieves

Best Wii Game: New Super Mario Bros. Wii

Best PC Game: Dragon Age: Origins

Best Handheld Game: Grand Theft Auto: Chinatown Wars

Best Shooter: Call of Duty: Modern Warfare 2

Best Action Adventure Game: Assassin's Creed II

Best RPG: Dragon Age: Origins

Best Multiplayer Game: Call of Duty: Modern Warfare 2

Best Fighting Game: Street Fighter IV

Best Individual Sports Game: UFC 2009 Undisputed

Best Team Sports Game: FIFA 10

Best Driving Game: Forza Motorsport 3

Best Music Game: The Beatles: Rock Band

Best Soundtrack: DJ Hero

Best Original Score: Halo 3: ODST

Best Graphics: Uncharted 2: Among Thieves

Best Game Based On A Movie/TV Show: South Park Let's Go Tower Defense Play!

Best Performance By A Human Female: Megan Fox as Mikaela Banes in Transformers: Revenge of the Fallen

Best Performance By A Human Male: Hugh Jackman as Wolverine in X-Men Origins: Wolverine

Best Cast: X-Men Origins: Wolverine

Best Voice: Jack Black for the voice of Eddie Riggs in Brütal Legend

Best Downloadable Game: Shadow Complex

Best DLC: Grand Theft Auto: The Ballad of Gay Tony

Most Anticipated Game of 2010: God of War III

2008 Awards